Nvidia wants to build Omniverse

The world's most powerful AI supercomputer, Eos, will help

Facebook would prefer to lock its users into the virtual world of its Metaverse, but more and more global players are heading in a different direction.

In the shadow of the massive social network, a technology is emerging that aims to build a digital copy of nearly everything, from replicating physics, chemistry, and biochemistry at the most basic level to creating a macroscopic digital twin of the entire globe: Earth-2.

Of course, we're referring to Nvidia's Omniverse environment, which aspires to be a kind of integrated internet platform for universal simulation and VR.

The simulation runs both on a powerful local server and in the cloud.

A few years ago, early signs pointed to nothing more than a simple collaboration service that would let a group of graphic designers working from home design and draw a vase together, but major firms such as Ericsson and BMW joined last year.

Omniverse is gradually winning over major engineering software vendors, such as Bentley, so finished models, from a single bolt to an entire house, can already be transferred easily into its standardized VR.

Then just connect machine learning technologies to Omniverse, and your prototype of a robotic vacuum cleaner, itself virtual, will start driving around a virtual villa somewhere in the virtual suburbs of a virtual city.

And because we're in VR, where time can run as fast as the GPU and CPU farm in a data center or on your corporate supercomputer allows, the hypothetical model of the new BobikLux vacuum cleaner will be ready in a fraction of the time. That is simply because it gets to vacuum 8,356,452 virtual room versions in Omniverse with 882,725 carpet, tile, and vinyl floor variants.

Today, ARM, Nordic, and many others design chips without owning a single chip factory, leaving final production to a factory in Southeast Asia. Omniverse could extend this fabless approach to even more sophisticated scenarios.

In brief, once your vacuum cleaner meets all requirements, you submit its designs to someone in Asia via Omniverse, and in a few weeks you receive a box with a prototype that works on the first try.

Omniverse will require enormous computing power if it is to work properly one day and not simply produce nice case studies for a few brands.

Fortunately, Nvidia doesn't make pots but extremely powerful chips, so its CEO, Jensen Huang, was able to dazzle the crowd with a slew of new data center hardware in the opening keynote at GTC.

It is all built on the H100, a modern GPU for machine learning and neural networks based on the Hopper architecture.

The processor has 80 billion transistors and can move up to 4.9 terabytes of data per second, enough to power all of the Omniverse's virtual vases, towns, and robotic vacuum cleaners. All of this comes at a computation speed of up to 4 PFLOPS, that is, 4 quadrillion AI operations per second on 8-bit floating-point values.

Only a few years ago, entire supercomputers reached comparable performance in traditional (non-AI) mathematical operations, and now a single dedicated GPU can do it. Of course, this is still not enough for a complete Omniverse simulation.

What if we put several H100s into a single server module? That's a great idea! The new version of Nvidia's DGX supercomputer, the DGX H100, appears before our eyes. It has eight H100 GPUs, 640 billion transistors, 32 PFLOPS of performance for AI calculations, 640 GB of memory, and a peak memory throughput of 24 TB/s.
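The DGX H100 totals follow directly from the per-GPU figures quoted above. A minimal sketch of that arithmetic, assuming the numbers simply scale linearly with the GPU count (the function and constant names are illustrative, not anything from Nvidia):

```python
# Per-GPU figures quoted in the article for the H100.
H100_TRANSISTORS = 80e9   # 80 billion transistors
H100_FP8_PFLOPS = 4       # up to 4 PFLOPS with 8-bit values
GPUS_PER_DGX = 8          # eight H100s per DGX H100
DGX_MEMORY_GB = 640       # total GPU memory quoted for the DGX H100


def dgx_totals(gpus=GPUS_PER_DGX):
    """Scale the per-GPU figures linearly to a whole DGX H100 (assumption)."""
    return {
        "transistors_billion": gpus * H100_TRANSISTORS / 1e9,
        "fp8_pflops": gpus * H100_FP8_PFLOPS,
        # Derived, not quoted in the article: memory per GPU.
        "memory_per_gpu_gb": DGX_MEMORY_GB / gpus,
    }


totals = dgx_totals()
print(totals)
```

Eight times 80 billion transistors gives the 640 billion quoted, and eight times 4 PFLOPS gives 32 PFLOPS. The 80 GB per GPU is only what the 640 GB total implies when divided evenly.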

Stack the DGX cubes on top of each other, connect them with fast optics, and the DGX SuperPOD supercomputer appears before our eyes, with 20 TB of memory and 1 EFLOPS of performance for machine learning and neural networks. That's one quintillion AI operations per second, if you please.

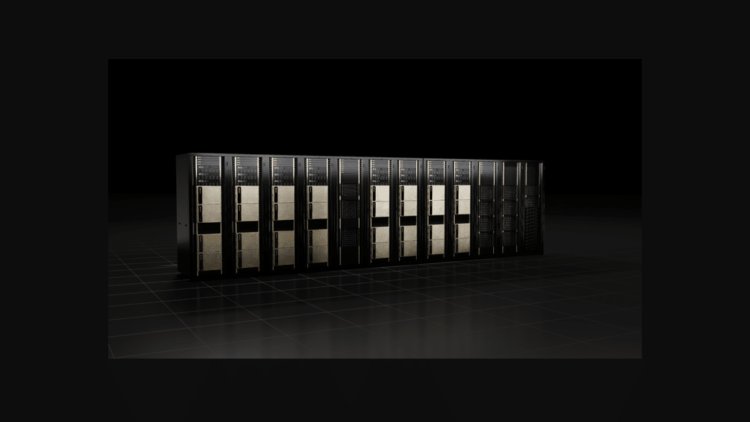

And what if… What if we put those SuperPODs right next to each other? With a touch of exaggeration, we finally have the brain of everything and a new reference machine for artificial intelligence - Nvidia Eos.

It will be the most powerful AI supercomputer in the world, with a respectable performance of 18.4 EFLOPS (18.4 quintillion AI operations per second), and in these tasks it will be up to four times faster than the world's fastest general-purpose supercomputer, the Japanese Fugaku.

Eos will be built later this year and will comprise 576 DGX H100 systems with a total of 4,608 H100 GPUs. The entire system is linked by a fast interconnect with a data throughput of 230 TB/s, which equates to about 1.8 petabits per second.
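The Eos headline numbers can be cross-checked against the DGX H100 figures given earlier. A short sketch, assuming performance adds up linearly across systems (a peak-figure simplification; the names are illustrative):

```python
# Figures quoted in the article.
DGX_SYSTEMS = 576      # DGX H100 systems in Eos
GPUS_PER_DGX = 8       # H100 GPUs per DGX H100
PFLOPS_PER_DGX = 32    # AI performance per DGX H100


def eos_totals(systems=DGX_SYSTEMS):
    """Return (GPU count, peak EFLOPS) assuming linear scaling."""
    gpus = systems * GPUS_PER_DGX
    eflops = systems * PFLOPS_PER_DGX / 1000  # 1 EFLOPS = 1000 PFLOPS
    return gpus, eflops


gpus, eflops = eos_totals()
print(f"{gpus:,} GPUs, {eflops:.1f} EFLOPS")
```

The result matches the 4,608 GPUs and roughly 18.4 EFLOPS quoted for Eos.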

To give you an idea, global Internet traffic was predicted to reach only 786 terabits per second last year. Nvidia Eos moves more than twice as much, which is a solid foundation.
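The bandwidth comparison is a simple unit conversion. A minimal sketch, assuming decimal prefixes (1 TB = 10^12 bytes) and 8 bits per byte, with illustrative names:

```python
# Figures quoted in the article.
EOS_FABRIC_TB_PER_S = 230      # terabytes per second
INTERNET_TBIT_PER_S = 786      # terabits per second (predicted global traffic)


def terabytes_to_terabits(tb_per_s):
    """Convert terabytes/s to terabits/s (8 bits per byte)."""
    return tb_per_s * 8


eos_tbit = terabytes_to_terabits(EOS_FABRIC_TB_PER_S)
eos_pbit = eos_tbit / 1000                 # 1 Pbit = 1000 Tbit
ratio = eos_tbit / INTERNET_TBIT_PER_S

print(f"Eos fabric: {eos_pbit:.2f} Pbit/s, {ratio:.2f}x the Internet figure")
```

This gives about 1.84 petabits per second and a ratio of roughly 2.3, consistent with "more than twice as much".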

According to Nvidia, the new supercomputer will serve as a reference system for future AI development; however, Omniverse will also require smaller dedicated platforms for virtualization, even for the small virtual worlds of individual businesses.

And it is precisely these digital twins that will be the focus of another new server architecture, OVX, which will come as a server, a Pod, and a SuperPod. Lockheed Martin, for example, is already using it to simulate its own Omniverse.